How simulation is helping to usher in tomorrow’s chips

By embracing comprehensive multi-physics simulation and leveraging AI to accelerate design processes, semiconductor companies can navigate the challenges of heterogeneous integration and deliver the computing platforms that will power the next wave of AI innovation.

By Jayraj Nair, Field CTO for Hi-Tech and APAC, Ansys

The semiconductor industry stands at a critical turning point. With global semiconductor sales reaching $57.8 billion last year, the need for the industry to scale has never been more apparent. Yet mounting physical and economic limitations threaten this growth. As AI applications drive unprecedented requirements for processing capabilities, chip designers are turning to advanced simulation technologies to enable the next generation of complex heterogeneous multi-die systems.

Today’s chips increasingly integrate specialised processing elements, particularly Neural Processing Units (NPUs) for AI workloads, alongside Graphics Processing Units (GPUs), Central Processing Units (CPUs) and memory in sophisticated multi-die packages. Designing these systems requires understanding complex interactions across electrical, thermal, and mechanical domains that can only be predicted through comprehensive multi-physics simulation. As the industry embraces this approach, simulation has evolved from a verification tool to a central enabler of innovation, allowing designers to explore novel architectures that would otherwise be too risky to attempt.

Beyond traditional scaling

For decades, the semiconductor industry relied on transistor scaling to improve performance and efficiency. However, as process nodes approach physical limits, the cost-benefit equation of scaling has shifted dramatically. The cost per transistor at advanced nodes is no longer decreasing and may even be increasing, pushing manufacturers toward alternative approaches to enhance system performance.

This reality has catalysed a fundamental shift away from simply scaling monolithic CPU and GPU designs. While GPUs revolutionised computing by enabling massive parallelism - first for graphics rendering and later for AI training - even these powerful processors face scaling limitations. With the AI chip market expected to grow by more than 30% in 2025, heterogeneous integration has emerged as the solution to ensure continued advancement. By combining multiple specialised dies - potentially manufactured on different process nodes - into a cohesive package, system architects can optimise each component for its specific function rather than compromise on a monolithic design.

NPUs represent one of the most significant specialised silicon developments for AI applications. These purpose-built processors contain architectures optimised for tensor operations with specialised data paths, memory hierarchies, and computational elements that dramatically accelerate neural network workloads compared to general-purpose processors. When integrated alongside CPUs for general computing and GPUs for parallel processing tasks, these heterogeneous systems deliver unprecedented computational capabilities for AI applications.

This shift toward heterogeneous integration introduces new design complexities that can’t be addressed through traditional prototyping methods alone. As these systems become more intricate, simulation has emerged as the critical enabler, allowing engineers to validate designs before committing to expensive silicon implementation.

Multi-physics challenges in NPU-based systems

The design of heterogeneous multi-die systems with NPUs introduces unprecedented complexity across multiple domains. Traditional simulation approaches that treat electrical, thermal, and mechanical phenomena as separate concerns simply won’t cut it for these highly integrated systems where interdependent physical effects significantly impact performance and reliability.

Power delivery networks and thermal management systems must be analysed holistically, as electrical performance affects thermal profiles while heat dissipation impacts electrical performance in a continuous feedback loop. This interdependency is particularly critical for NPUs, which can experience dramatic power fluctuations during different computational phases. Similarly, high-bandwidth, low-power interfaces between dies demand detailed electromagnetic analysis to ensure signal integrity while operating within increasingly tight power constraints – a challenge that grows more complex as die-to-die communication speeds increase.

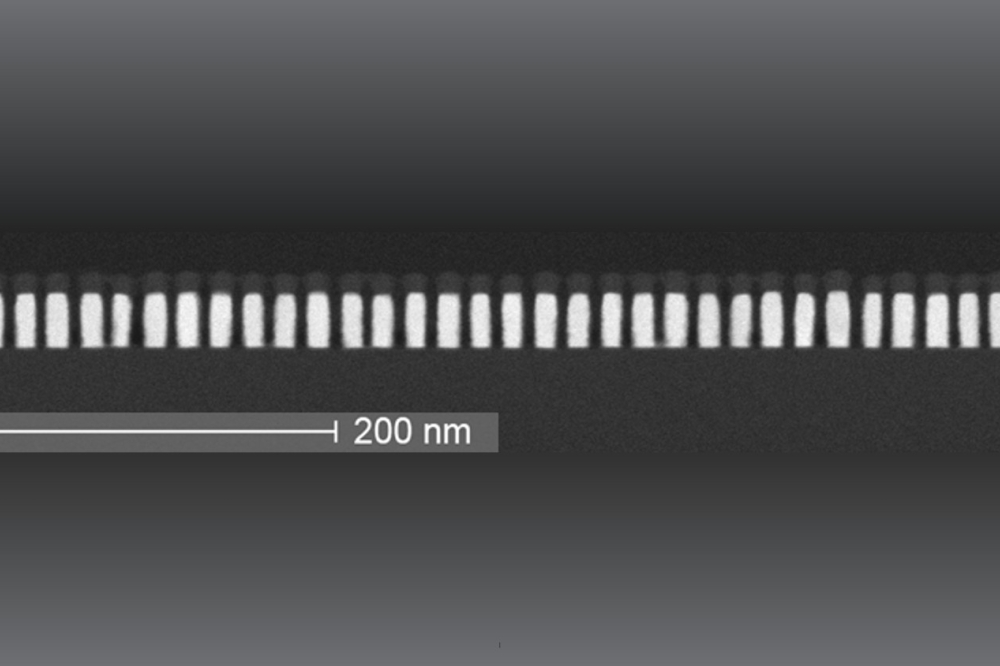

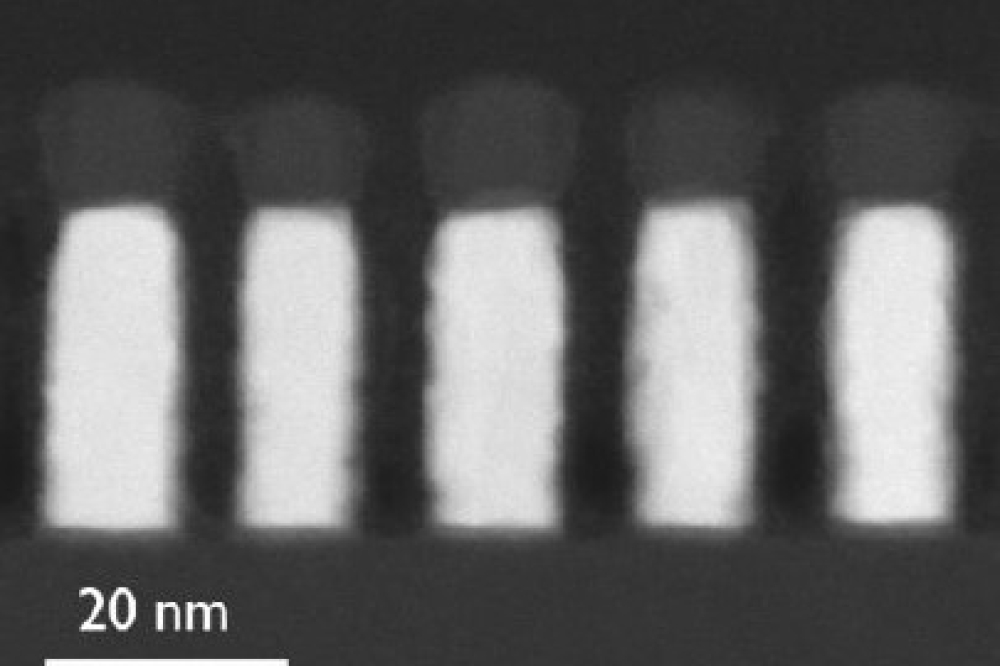

Multi-scale physics challenges have become increasingly important as system designs span from nanometre-scale transistors to centimetre-scale packages and beyond. This wide range of physical dimensions requires simulation tools capable of seamlessly transitioning between different scales while maintaining accuracy and computational efficiency. Additionally, as optics and electronics converge in modern systems, thermal stability challenges with co-packaged optics have introduced yet another dimension of complexity requiring sophisticated multi-physics modelling approaches.

The complexity extends to power integrity across multiple domains, as NPUs and other specialised processors typically operate with different voltage levels and power requirements. This necessitates sophisticated power delivery network analysis to prevent voltage droops that could compromise system stability. Mechanical stress presents another significant challenge, as the complex structures in advanced packages experience thermal expansion and contraction during assembly and operation that can affect both reliability and electrical performance through stress-induced parameter shifts.

Beyond these component-level concerns, predicting overall system performance under realistic workloads has become essential for optimising heterogeneous architectures. These challenges have driven the semiconductor industry toward more sophisticated simulation approaches that can address the multifaceted nature of modern chip design.

Advanced simulation methodologies

Modern simulation methodologies for heterogeneous systems are evolving toward unified, multi-physics approaches that capture the complex interactions between different physical domains. Co-simulation frameworks have emerged as particularly valuable tools, enabling simultaneous analysis of electrical, thermal, and mechanical phenomena with bidirectional coupling of results. In these environments, power distribution analysis feeds directly into thermal simulation, which in turn affects electrical performance through temperature-dependent parameters, creating a more realistic model of the system’s actual behaviour under operating conditions.
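The bidirectional coupling described above can be illustrated with a deliberately small sketch. It assumes a single lumped thermal resistance and an exponential leakage model; the function name, parameter names and all numerical values are hypothetical and chosen only for illustration, not taken from any particular tool.

```python
import math


def solve_electrothermal(p_dynamic_w, p_leak_ref_w, t_ambient_c=25.0,
                         r_theta_c_per_w=0.5, t_ref_c=25.0,
                         leak_coeff_per_c=0.02, tol_c=1e-6, max_iter=200):
    """Iterate power -> temperature -> power until the temperature settles.

    Assumed models (illustrative only):
      leakage power  P_leak(T) = P_leak_ref * exp(k * (T - T_ref))
      temperature    T = T_ambient + R_theta * (P_dynamic + P_leak)
    """
    temp_c = t_ambient_c
    for iteration in range(1, max_iter + 1):
        # Electrical step: leakage grows with the current temperature estimate.
        p_leak_w = p_leak_ref_w * math.exp(leak_coeff_per_c * (temp_c - t_ref_c))
        p_total_w = p_dynamic_w + p_leak_w
        # Thermal step: steady-state temperature for that total power.
        new_temp_c = t_ambient_c + r_theta_c_per_w * p_total_w
        if abs(new_temp_c - temp_c) < tol_c:
            return new_temp_c, p_total_w, iteration
        temp_c = new_temp_c
    raise RuntimeError("coupled loop did not converge (possible thermal runaway)")


# Hypothetical die: 40 W of dynamic power and 5 W of leakage at 25 degrees C.
temperature_c, power_w, iterations = solve_electrothermal(40.0, 5.0)
print(f"{temperature_c:.2f} C at {power_w:.2f} W after {iterations} iterations")
```

An uncoupled estimate that ignores the temperature dependence of leakage would settle at 47.5 °C for these numbers; the coupled loop converges somewhat higher, which is the kind of discrepancy that co-simulation is meant to expose.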

To manage the computational complexity of these multi-physics problems, Domain Decomposition Methods (DDM) have become increasingly important. These techniques strategically divide complex problems into multiple smaller, manageable subdomains that can be solved independently and then combined, substantially improving the capability to solve multi-domain, multi-physics large-scale problems efficiently without sacrificing accuracy. This approach is particularly valuable for heterogeneous systems where different components may require different levels of simulation fidelity.
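As a rough illustration of the idea, the sketch below applies one classic domain decomposition scheme, the overlapping alternating Schwarz method, to one-dimensional steady-state heat conduction with a uniform heat source. The article does not say which DDM variant production tools use, so the choice of method, the function names and the problem set-up are all assumptions.

```python
def solve_dirichlet_segment(source, left_value, right_value, h):
    """Solve -u'' = source on the interior nodes of one subdomain.

    Uses the Thomas algorithm on the tridiagonal system
    (-u[i-1] + 2*u[i] - u[i+1]) / h^2 = source[i] with fixed end values.
    """
    n = len(source)
    d = [s * h * h for s in source]
    d[0] += left_value
    d[-1] += right_value
    c_prime = [0.0] * n
    d_prime = [0.0] * n
    c_prime[0] = -0.5
    d_prime[0] = d[0] / 2.0
    for i in range(1, n):
        denom = 2.0 + c_prime[i - 1]
        c_prime[i] = -1.0 / denom
        d_prime[i] = (d[i] + d_prime[i - 1]) / denom
    u = [0.0] * n
    u[-1] = d_prime[-1]
    for i in range(n - 2, -1, -1):
        u[i] = d_prime[i] - c_prime[i] * u[i + 1]
    return u


def schwarz_1d(n_nodes=41, heat_source=8.0, overlap=4, sweeps=30):
    """Alternate between two overlapping subdomains until they agree."""
    h = 1.0 / (n_nodes - 1)
    u = [0.0] * n_nodes              # global iterate; both ends held at 0
    mid = n_nodes // 2
    left_end = mid + overlap         # subdomain A spans nodes 0..left_end
    right_start = mid - overlap      # subdomain B spans nodes right_start..end
    for _ in range(sweeps):
        # Subdomain A: its right boundary value comes from the latest B solution.
        source_a = [heat_source] * (left_end - 1)
        u[1:left_end] = solve_dirichlet_segment(source_a, u[0], u[left_end], h)
        # Subdomain B: its left boundary value comes from the latest A solution.
        source_b = [heat_source] * (n_nodes - 2 - right_start)
        u[right_start + 1:n_nodes - 1] = solve_dirichlet_segment(
            source_b, u[right_start], u[-1], h)
    return u, h


profile, spacing = schwarz_1d()
print(f"peak value: {max(profile):.4f}")
```

Each subdomain solve is small and independent of the other apart from the boundary values exchanged in the overlap region. In a real multi-physics solver the subdomains could be handled by different solvers or at different fidelities, which is the property the paragraph above highlights.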

Time-to-market pressures have become a critical factor, particularly in competitive sectors like automotive, where manufacturers struggle to meet the growing demand for sophisticated silicon components. Advanced System Architecture Modelers now enable engineering teams to adopt a shift-left approach, allowing earlier validation of system-level performance and accelerating development cycles by identifying potential issues before committing to silicon implementation. These tools support new ways of working that break down traditional silos between different engineering disciplines, fostering collaborative environments where thermal, mechanical, and electrical experts can work concurrently rather than sequentially.

The integration of machine learning into the simulation workflow represents another significant advancement. AI-based methods can accelerate simulation by training models on existing results, allowing engineers to quickly explore design spaces without running full-scale simulations for every configuration.

These advances in simulation technology have enabled comprehensive package analysis for the complex 2.5D and 3D packaging configurations that are increasingly common in NPU-based systems. Modern tools can now model through-silicon vias, redistribution layers, and embedded cooling technologies with high fidelity, providing accurate predictions of system performance before physical prototyping.

Die-to-die interfaces: Critical enablers of NPU integration

The interfaces between dies in heterogeneous systems represent both a key enabling technology and a significant design challenge. High-speed die-to-die communication is essential for NPUs to efficiently access memory and exchange data with CPUs and other system components. The industry is pursuing several promising technologies to address these connectivity requirements, each with distinct advantages for specific use cases.

Advanced packaging with silicon interposers provides high-density interconnects between dies, enabling wider data paths with shorter trace lengths that significantly reduce latency and power consumption. This approach has proven particularly valuable for connecting NPUs to high-bandwidth memory, where wide data paths are critical for computational performance.

For even greater integration density, hybrid bonding technologies that create direct copper-to-copper connections between dies enable extremely high interconnect densities, supporting the massive parallel data movement required by modern NPU architectures.

Looking toward future systems with more distributed processing, optical interconnect technologies based on silicon photonics promise higher bandwidth with lower power consumption than traditional electrical interconnects, particularly for longer-reach connections between chiplets. Simulating these advanced interfaces requires specialised tools that can accurately capture high-frequency electromagnetic effects, crosstalk between densely packed traces, and the impact of process variations on signal integrity.

Thermal management and power integrity

As heterogeneous systems integrate high-performance NPUs alongside traditional processors, thermal management becomes increasingly challenging. NPUs can generate significant heat during intensive AI workloads, creating localised hotspots whose power density significantly exceeds the average the cooling system is designed to handle. This challenge is compounded by thermal coupling between dies, where heat generated by one component affects adjacent dies, creating complex thermal profiles that must be analysed holistically.
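Thermal coupling between dies is often approximated at first order with a thermal resistance matrix, in which off-diagonal entries describe how much one die heats its neighbours. The sketch below assumes such a linear steady-state model; the three-die package and every number in it are hypothetical.

```python
def die_temperatures(power_w, r_theta_c_per_w, t_ambient_c=25.0):
    """Steady-state die temperatures: T_i = T_ambient + sum_j R[i][j] * P[j]."""
    return [
        t_ambient_c + sum(r_ij * p_j for r_ij, p_j in zip(row, power_w))
        for row in r_theta_c_per_w
    ]


# Hypothetical package with three dies: an NPU, a CPU and a memory stack.
DIES = ["npu", "cpu", "memory"]
# Diagonal terms model self-heating; off-diagonal terms model coupling (C/W).
R_THETA = [
    [0.50, 0.10, 0.15],
    [0.10, 0.60, 0.05],
    [0.15, 0.05, 0.80],
]
POWER_W = [60.0, 20.0, 8.0]

temps_c = die_temperatures(POWER_W, R_THETA)
for name, temp in zip(DIES, temps_c):
    print(f"{name}: {temp:.1f} C")
```

With these assumed values the memory stack would sit near 31 °C from its own 8 W alone, but ends up roughly 10 °C hotter once heat from the NPU and CPU is included, which is why the dies cannot be analysed in isolation.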

The dynamic nature of AI workloads further complicates thermal management, as these applications often result in variable power consumption patterns that create transient thermal conditions requiring time-dependent analysis. To address these challenges, engineers are developing advanced cooling solutions including embedded cooling channels and vapour chambers that require detailed modelling to predict performance across various operating scenarios.
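A minimal way to see why time-dependent analysis matters is a lumped thermal RC model driven by a bursty power profile. The sketch below assumes a single thermal resistance and capacitance and uses explicit Euler time stepping; the workload pattern and component values are hypothetical.

```python
def simulate_transient(power_profile_w, dt_s, r_theta_c_per_w=0.5,
                       c_theta_j_per_c=2.0, t_ambient_c=25.0):
    """Explicit-Euler integration of C * dT/dt = P(t) - (T - T_ambient) / R."""
    temp_c = t_ambient_c
    history_c = []
    for p_w in power_profile_w:
        heat_out_w = (temp_c - t_ambient_c) / r_theta_c_per_w
        temp_c += dt_s * (p_w - heat_out_w) / c_theta_j_per_c
        history_c.append(temp_c)
    return history_c


# Hypothetical workload: 2 s bursts at 80 W separated by 2 s at 10 W.
DT_S = 0.01
one_cycle_w = [80.0] * 200 + [10.0] * 200
profile_w = one_cycle_w * 5

temps_c = simulate_transient(profile_w, DT_S)
average_power_w = sum(profile_w) / len(profile_w)
steady_state_at_average_c = 25.0 + 0.5 * average_power_w

print(f"peak transient temperature: {max(temps_c):.1f} C")
print(f"steady state at average power: {steady_state_at_average_c:.1f} C")
```

A steady-state calculation based on average power understates the peak temperature reached during each burst, so a design sized only for the average could exceed its limits in operation.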

Power delivery is equally critical in heterogeneous NPU systems. These specialised processors often require precise voltage regulation under rapidly changing load conditions to maintain performance and prevent errors. Heterogeneous systems typically require distributed power delivery networks to support multiple dies with different voltage requirements, while the dynamic load profiles characteristic of NPU operation can stress power networks and create voltage fluctuations. Many NPU architectures operate at reduced voltage levels to maximise energy efficiency, providing minimal margins for transients and droops and making accurate simulation of the power delivery network essential.
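To make the margin problem concrete, a first-order droop estimate combines the resistive drop with the inductive kick from a fast load step, ΔV ≈ I·R + L·(ΔI/Δt). The sketch below assumes this simplified model and ignores decoupling capacitance and resonance, which a real power delivery network analysis would include; all names and values are hypothetical.

```python
def estimate_droop_v(current_step_a, rise_time_s, r_pdn_ohm, l_pdn_h):
    """First-order droop: resistive drop plus inductive L * dI/dt term."""
    resistive_v = current_step_a * r_pdn_ohm
    inductive_v = l_pdn_h * current_step_a / rise_time_s
    return resistive_v + inductive_v


def meets_margin(v_nominal, v_min, droop_v):
    """True if the rail stays at or above the minimum operating voltage."""
    return v_nominal - droop_v >= v_min


# Hypothetical load step: 20 A in 10 ns through 0.5 milliohm and 10 pH.
droop_v = estimate_droop_v(20.0, 10e-9, 0.5e-3, 10e-12)
print(f"estimated droop: {droop_v * 1000:.0f} mV")

# The same droop is tolerable on a 0.75 V rail with a 0.70 V floor,
# but not on a 0.65 V rail with a 0.63 V floor.
print(meets_margin(0.75, 0.70, droop_v))
print(meets_margin(0.65, 0.63, droop_v))
```

The comparison shows the point made above: the lower the operating voltage, the less room there is for the same absolute droop.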

Embracing AI to accelerate simulation and design

The computational demands of simulating heterogeneous NPU systems have become increasingly challenging as designs grow in complexity. Machine learning methods have emerged as valuable tools for accelerating complex simulations while maintaining acceptable accuracy. By training surrogate models on results from detailed physics-based simulations, engineers can rapidly predict system behaviour across numerous design variations without running full electromagnetic or thermal simulations for each configuration.
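The surrogate idea can be sketched in a few lines. Here a simple quadratic least-squares fit stands in for the machine learning model, and a closed-form function stands in for the detailed physics solve; both are assumptions made to keep the example self-contained, and production surrogates are typically far richer.

```python
import math


def detailed_simulation(power_w):
    """Stand-in for an expensive physics solve (hypothetical closed form)."""
    return 25.0 + 0.4 * power_w + 5.0 * math.exp(0.01 * power_w)


def solve_linear(a, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_quadratic_surrogate(samples, scale=100.0):
    """Least-squares fit of T ~ c0 + c1*x + c2*x^2, with x = power / scale."""
    xs = [p / scale for p, _ in samples]
    ys = [t for _, t in samples]
    # Normal equations (A^T A) c = A^T y for design-matrix columns [1, x, x^2].
    ata = [[sum(x ** (i + j) for x in xs) for j in range(3)] for i in range(3)]
    aty = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(3)]
    c0, c1, c2 = solve_linear(ata, aty)
    return lambda power_w: c0 + c1 * (power_w / scale) + c2 * (power_w / scale) ** 2


# "Train" on six detailed runs, then query points that were never simulated.
training = [(p, detailed_simulation(p)) for p in (0.0, 20.0, 40.0, 60.0, 80.0, 100.0)]
surrogate = fit_quadratic_surrogate(training)

for power in (10.0, 50.0, 90.0):
    error = abs(surrogate(power) - detailed_simulation(power))
    print(f"{power:5.1f} W: surrogate error {error:.3f} C")
```

Once fitted, the surrogate costs a handful of arithmetic operations per query, so it can be used to sweep a design space quickly, with the detailed solver kept for spot checks and for configurations outside the range of the training data.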

Beyond simulation acceleration, AI-enhanced design flows can play an increasingly important role in guiding decisions based on accumulated knowledge from previous implementations. Machine learning systems can identify patterns in successful designs and flag potential issues before they become costly problems, effectively capturing and applying institutional knowledge across projects.

Unified design methodologies

As heterogeneous integration with NPUs becomes the dominant paradigm for high-performance computing, the industry is moving toward unified design methodologies that span multiple physical domains and packaging levels. Standardisation of interface files represents a critical step toward more unified workflows, allowing different simulation tools to exchange information and enabling comprehensive cross-domain analysis.

Equally important is the development of secure methods for sharing geometric and physical data without exposing proprietary design details. As heterogeneous systems increasingly incorporate components from multiple suppliers, the ability to exchange essential physical characteristics while protecting intellectual property becomes crucial for enabling better multi-component analysis.

Standardised specification of compliance for interfaces between heterogeneous components will also streamline the integration process and reduce compatibility risks. Unified approaches to specifying and verifying the performance of die-to-die connections, thermal interfaces, and power delivery characteristics establish clear expectations for component suppliers and system integrators.

The transformation of the semiconductor industry requires not just technological evolution but a parallel evolution in workforce capabilities. As designs become more complex and interdisciplinary, the industry faces a critical skills gap that threatens to impede progress. Tomorrow’s semiconductor professionals need to adopt broader thinking patterns that cross traditional domain boundaries, embracing both deep expertise and systems-level understanding. Companies must invest in upskilling and reskilling their workforce to meet these new demands, fostering environments where electrical engineers understand thermal implications and mechanical engineers appreciate signal integrity concerns. This workforce transformation is just as essential as technological advancement for addressing the semiconductor industry’s mounting challenges.

The fact is, by embracing comprehensive multi-physics simulation and leveraging AI to accelerate design processes, semiconductor companies can navigate the challenges of heterogeneous integration and deliver the computing platforms that will power the next wave of AI innovation. As these simulation capabilities continue to mature, they will enable increasingly sophisticated architectures that deliver the computational power needed for next-generation applications while effectively managing system constraints.